Summary

- Visual Intelligence lets you scan your environment for related info, so long as you’ve got a compatible iPhone running the right version of iOS.

- Scanning text offers options like translations, location info, and the ability to generate events in Apple Calendar.

- You can also scan plants, animals, and inanimate objects. Extended info is available through options like ChatGPT and Google Search.

One of the main attractions of Apple Intelligence is a feature dubbed Visual Intelligence. It’s closely associated with the iPhone 16 in Apple’s marketing, but don’t let that fool you: it also works on the iPhone 16e and iPhone 15 Pro. It’s just that those phones don’t have a dedicated Camera Control button available as a shortcut, so you’ll have to customize the Action button or your lockscreen shortcuts instead.

Beyond that, enabling Visual Intelligence is as simple as updating to the latest version of iOS to ensure you’ve got maximum compatibility with devices and features. iOS 18.2 is the absolute minimum, but that only supports the iPhone 16 and 16 Pro. You’ll need iOS 18.4 or later if you’ve got an iPhone 16e or 15 Pro.

To use the tech, point your iPhone’s rear camera at a subject of interest, then trigger it — in the case of the 16 and 16 Pro, that means pressing and holding the Camera Control button. What you can do with Visual Intelligence depends on what you’re pointing it at, as I’ll explain below.

1

Get info and options for businesses (and other locations)

It’s not just about restaurants

In supported countries — just the US, as of this writing — Visual Intelligence can scan signage at a location and pop up more information about it, such as its hours, services, reviews, and contact info. That data’s often already in front of you, but it may save you the trouble of having to walk over to read if you’re just passing by. And of course, contact info will let you jump straight to a website or phone call if you have further questions.

Some businesses even support placing reservations or delivery orders this way. Restaurants may also have food photos and sit-down menus available, which is probably the handiest thing, given that if you’re a few feet away from the door, you’re likely considering eating there and then. I could’ve certainly used this feature while I was living in Austin — not all barbeque is created equal.


2

Translate, summarize, and act on text

The vacationer’s best friend

Perhaps the best practical use of Visual Intelligence is translating signs, menus, and other text when you’re traveling abroad. All you have to do is scan the text, then tap Translate when the option appears. I could’ve used this feature when I visited Berlin and Munich a long time ago — while I knew a little German at the time, and understood it better in context, it wasn’t always easy to buy tickets or order from restaurants. I’d be totally dependent on Visual Intelligence if I visited Japan.

In your own language, you can have text read aloud, or summarized if it’s especially long. That second feature is unlikely to be very useful unless you’re doing school work, but it’s good to have nevertheless. Much more essential is the ability to extract actionable data, such as dates, phone numbers, and email addresses, though you may have to hit the triple-dot icon to see all the possibilities.

3

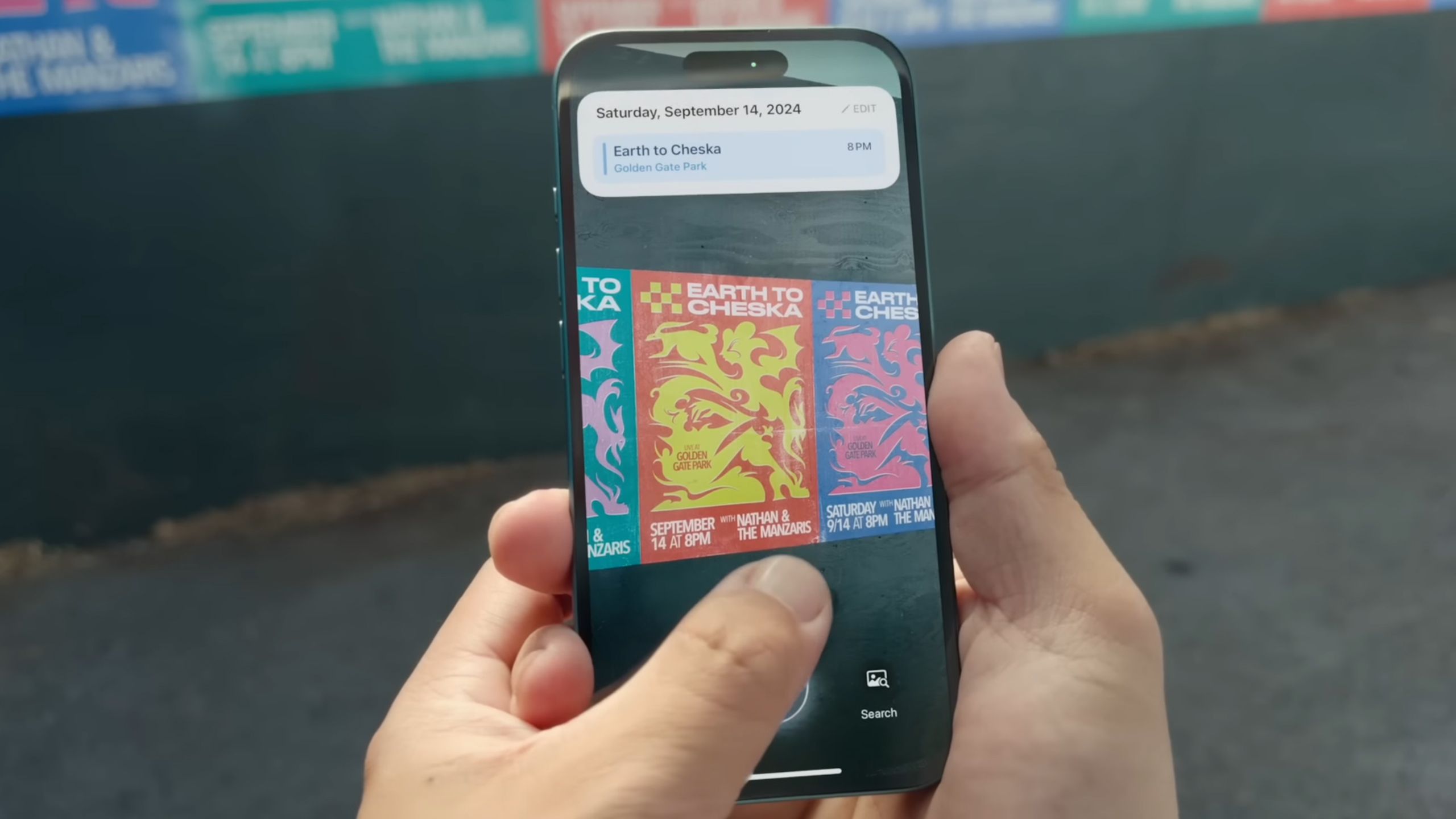

Create an Apple Calendar event

Never miss another show

Cities are often littered with posters and flyers for events like concerts, political rallies, and stand-up gigs. If you have trouble remembering the ones you want to go to, just scan something with a date (in the future only) and Visual Intelligence will offer up a Create Event button. In fact, this should work with just about anything with an upcoming month and day, but per Apple, it’s mostly intended for when you’re out on the town.

Be sure to double-check all the auto-generated details before tapping Add. There’s the potential for Visual Intelligence to misinterpret what it’s seeing, in which case you might end up with a confusing event name, or even the wrong place or time. Should you spot a mistake, tap Edit to manually adjust fields as necessary.

4

Get info about plants, animals, and other objects

You may need to be patient

If you point Visual Intelligence at a plant or animal, and it recognizes it as such, it should automatically show a relevant tab at the top of the screen. In the case of a dog, for example, your iPhone should be able to tell what breed you’re looking at. Tap on the tab for more details.

You may need to have some luck while using this feature, though. While plants aren’t going anywhere, it’s often difficult to get close enough to an animal for a clear scan. Don’t risk scaring an animal and/or getting attacked just to satisfy your curiosity.

For inanimate objects and scenery, you’ll have to tap the Ask button (a speech bubble). It sends the image to ChatGPT for analysis, and you should receive a description of what you’re looking at within a few seconds. Remember that for the best results, you’ll want to get as close as possible while keeping the subject in the center of the frame. That’s part of how Visual Intelligence decides what to analyze.

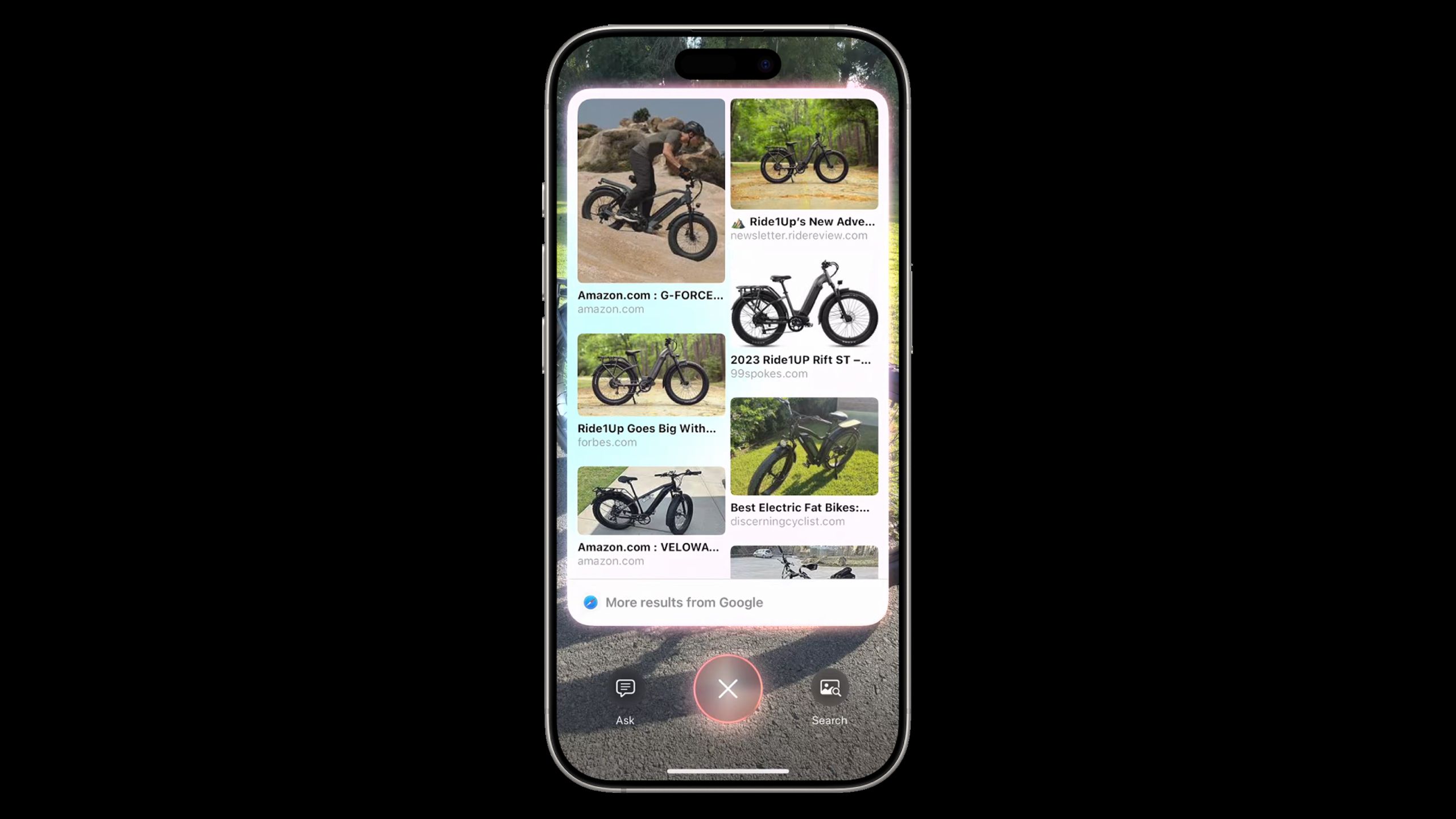

5

Search Google for similar images

Faster than Google itself

When you want more info than either Apple or ChatGPT can provide, tapping the Search (photo/painting) icon will pop up results from Google Search. If you see something you’re interested in, tap on its thumbnail to visit the related web link. Apple gives the example of an e-bike: if you scan one, Google should show links to places where you can buy personal electric vehicles (PEVs), hopefully including the bike you’re actually looking at.

Do your homework before buying anything on a whim.

To be clear, you can get similar results using Google Lens, which has been around for years. Visual Intelligence is simply faster than opening the Google app if you’ve got a compatible iPhone. Effectively, it’s Apple’s answer to Lens integration on Android devices.
